ETL and ELT are the two main ways data teams ingest, transform, and eventually expose their data to their stakeholders. They’re processes that have evolved in parallel with the development of modern cloud data warehouses and ETL tooling.

In either process, the letters in ETL/ELT stand for:

- E: Extract, when raw data is extracted from different data sources. These data sources often include backend databases, marketing and email CRMs, ad platforms, and more.

- L: Load, when data is loaded into a central data warehouse.

- T: Transform, when the raw data is modeled into a unified, meaningful, and standardized format.

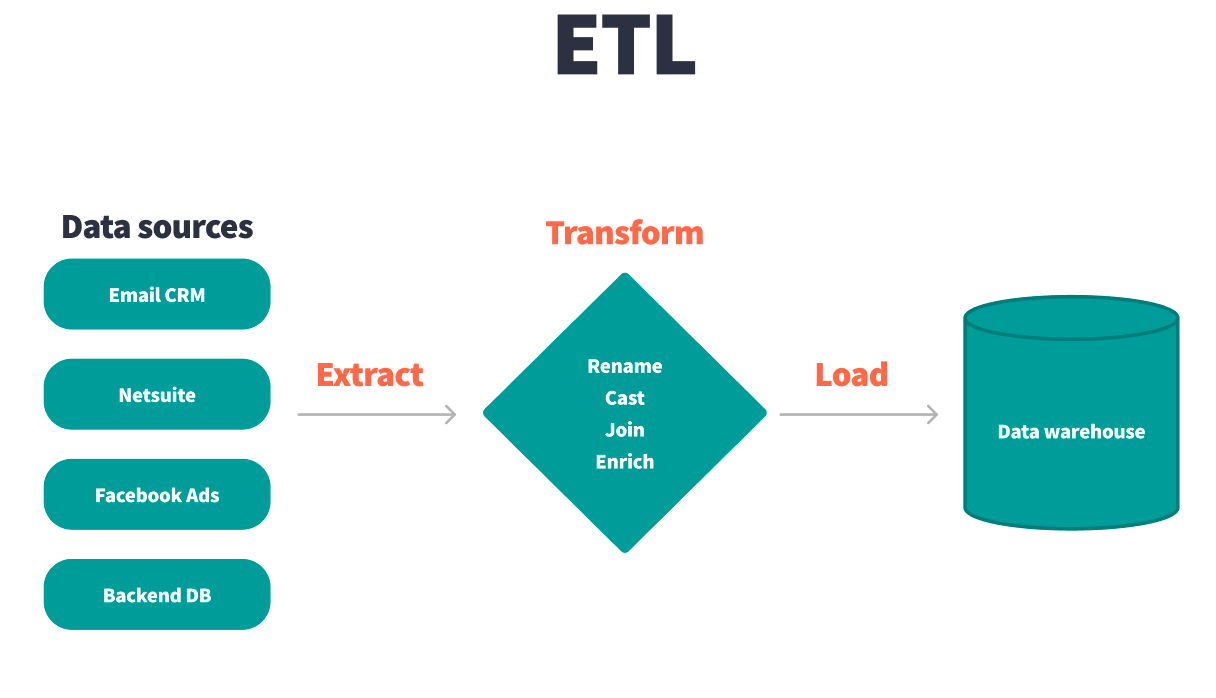

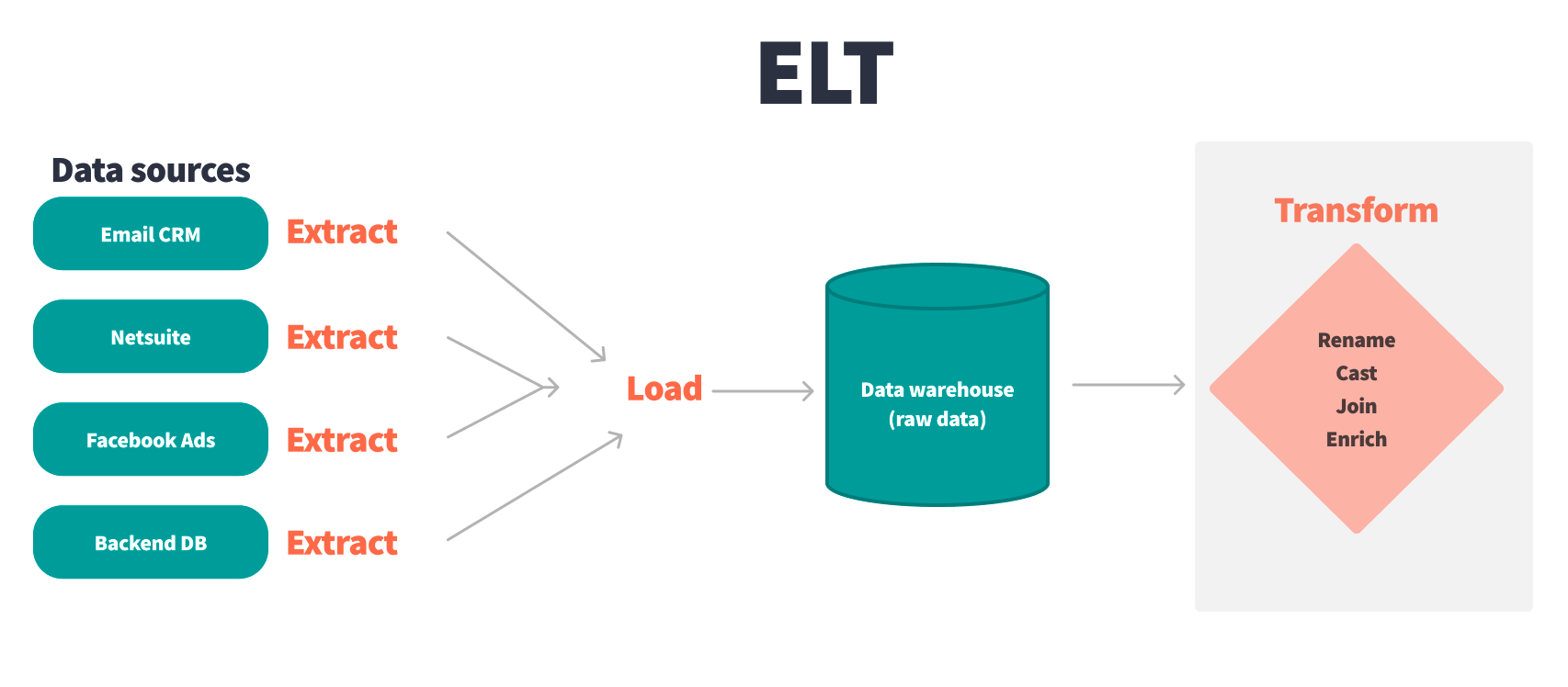

Where these two processes diverge is in the timing and location of the transformation layer. In short, during the ETL process, the transformation of raw data happens before it hits the data warehouse. In ELT processes, transformation occurs in the data warehouse after raw data has already been dropped off there; both raw and transformed data live in the data warehouse in this approach.

It’s not just semantics—the ordering of when and where transformation occurs plays a vital role in how data teams store, govern, standardize, model, and test their data. Use this page to understand how these two processes differ, the benefits and drawbacks of each option, and which approach might be best for you and your team.

ETL vs. ELT: A high-level overview

The primary difference between ETL and ELT is the when and where of transformation: whether it takes place before data is loaded into the data warehouse, or after it’s stored. This ordering has considerable implications for:

- the technical skills required to implement the pipeline,

- the number and complexity of products needed to support each option,

- the skillset teams hire for and the structure of data teams themselves, and

- the preparedness and flexibility of a data stack.

Before we get into the nitty-gritty of those differences, let’s clearly define what ETL and ELT are, and why ELT has gained so much traction in the analytics industry in recent years.

What is ETL?

ETL, or “Extract, Transform, Load”, is the process of first extracting data from a data source, transforming it, and then loading it into a target data warehouse. In ETL workflows, much of the meaningful data transformation occurs outside this primary pipeline in a downstream business intelligence (BI) platform.

In many ways, the ETL workflow could have been called the ETLT workflow, because a considerable portion of meaningful data transformation happens outside the data pipeline. The same transformations can occur in both ETL and ELT workflows; the primary difference is when the data is transformed (inside or outside the primary ETL workflow) and where (ETL platform, BI tool, or data warehouse).
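To make the ordering concrete, here is a minimal Python sketch of an ETL pipeline. It assumes an in-memory SQLite database as a stand-in for the target warehouse; the `RAW_ORDERS` records and the `extract`, `transform`, `load`, and `run_etl` functions are hypothetical. The point is that cleanup happens in application code, so only transformed rows ever reach the warehouse.

```python
import sqlite3

# Hypothetical raw records, standing in for rows pulled from a source system.
RAW_ORDERS = [
    {"id": "1", "amount": "19.99", "status": "Shipped"},
    {"id": "2", "amount": "5.00", "status": "shipped "},
    {"id": "3", "amount": "42.50", "status": "RETURNED"},
]


def extract():
    """Extract: in a real pipeline this would query an API or a database."""
    return list(RAW_ORDERS)


def transform(rows):
    """Transform in application code, before anything reaches the warehouse."""
    return [
        (int(r["id"]), round(float(r["amount"]), 2), r["status"].strip().lower())
        for r in rows
    ]


def load(conn, rows):
    """Load: only the already-transformed rows are written."""
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, status TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_etl():
    conn = sqlite3.connect(":memory:")  # in-memory SQLite stands in for a warehouse
    load(conn, transform(extract()))
    return conn


if __name__ == "__main__":
    conn = run_etl()
    print(conn.execute("SELECT * FROM orders ORDER BY id").fetchall())
    # The raw data never landed, so 'orders' is the only table in the warehouse.
    print(conn.execute("SELECT name FROM sqlite_master WHERE type = 'table'").fetchall())
```

Because the raw values are discarded before load, changing the cleanup rules later in this sketch means re-running extraction against the source.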

What is ELT?

Extract, Load, Transform (ELT) is the process of first extracting data from different data sources, then loading it into a target data warehouse, and finally transforming it.

ELT has emerged as a paradigm for how to manage information flows in a modern data warehouse. This represents a fundamental shift from how data was previously handled, when ETL was the workflow most companies implemented.

Transitioning from ETL to ELT means that you no longer have to capture your transformations during the initial loading of the data into your data warehouse. Instead, you can load all of your data, then build transformations on top of it. Data teams report that the ELT workflow has several advantages over the traditional ETL workflow, which we’ll go over below.
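For contrast, here is a minimal ELT sketch. It again assumes an in-memory SQLite database as a stand-in for a cloud data warehouse, and the `raw_orders` and `orders` tables, the sample rows, and the helper functions are hypothetical. The source rows are loaded untouched, and the transformation is a SQL statement that runs inside the warehouse, so the raw and modeled tables end up living side by side.

```python
import sqlite3

# Hypothetical source records; values are deliberately left as untyped strings.
RAW_ORDERS = [
    ("1", "19.99", "Shipped"),
    ("2", "5.00", "shipped "),
    ("3", "42.50", "RETURNED"),
]

# The transformation is plain SQL that runs inside the warehouse.
ORDERS_MODEL_SQL = """
CREATE TABLE orders AS
SELECT
    CAST(id AS INTEGER)  AS id,
    CAST(amount AS REAL) AS amount,
    LOWER(TRIM(status))  AS status
FROM raw_orders
"""


def extract_and_load(conn):
    """Extract + Load: land the source rows as-is, with no cleanup."""
    conn.execute("CREATE TABLE raw_orders (id TEXT, amount TEXT, status TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", RAW_ORDERS)


def transform(conn):
    """Transform: build a modeled table on top of the raw one, in SQL."""
    conn.execute(ORDERS_MODEL_SQL)


def run_elt():
    conn = sqlite3.connect(":memory:")  # stand-in for a cloud data warehouse
    extract_and_load(conn)
    transform(conn)
    conn.commit()
    return conn


if __name__ == "__main__":
    conn = run_elt()
    print(conn.execute("SELECT * FROM orders ORDER BY id").fetchall())
    # The untouched raw rows are still available in the warehouse.
    print(conn.execute("SELECT * FROM raw_orders").fetchall())
```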

Core differences and similarities between ETL and ELT

You may read other articles or technical documents that use ETL and ELT interchangeably. On paper, the only difference is the order in which the T and the L appear. However, this mere switching of letters drastically changes the way data exists in and flows through a business’s system.

In both processes, data from different data sources is extracted in similar ways. However, data is directly loaded into the target warehouse before transformations occur in ELT workflows. Now, both raw and transformed data can live in a data warehouse. In ELT workflows, data practitioners have the flexibility to model the data after they’ve had the opportunity to explore and analyze the raw data. ETL workflows can be more constraining since transformations happen immediately after extraction.
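Keeping raw data in the warehouse is what makes an explore-first workflow possible. The sketch below assumes an in-memory SQLite database with a hypothetical `raw_orders` table; the `build_warehouse`, `explore_statuses`, and `rebuild_orders` helpers and the `V1`/`V2` model definitions are illustrative only. It inspects the raw `status` values, builds an `orders` model, and later revises that model directly from the retained raw table without going back to the source.

```python
import sqlite3


def build_warehouse():
    """Hypothetical warehouse with raw, uncleaned rows already loaded."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE raw_orders (id TEXT, amount TEXT, status TEXT)")
    conn.executemany(
        "INSERT INTO raw_orders VALUES (?, ?, ?)",
        [("1", "19.99", "Shipped"), ("2", "5.00", "shipped "), ("3", "42.50", "RETURNED")],
    )
    return conn


def explore_statuses(conn):
    """Explore: look at the raw values before deciding how to model them."""
    rows = conn.execute("SELECT DISTINCT status FROM raw_orders ORDER BY status")
    return [r[0] for r in rows]


def rebuild_orders(conn, model_sql):
    """(Re)build the modeled table from the retained raw data; no re-extraction."""
    conn.execute("DROP TABLE IF EXISTS orders")
    # model_sql is a trusted constant in this sketch; never interpolate untrusted input.
    conn.execute(f"CREATE TABLE orders AS {model_sql}")


# First version of the model: normalize casing and whitespace.
V1 = "SELECT CAST(id AS INTEGER) AS id, LOWER(TRIM(status)) AS status FROM raw_orders"

# Revised version: after exploring, the team also wants a flag for returns.
V2 = """
SELECT CAST(id AS INTEGER) AS id,
       LOWER(TRIM(status)) AS status,
       LOWER(TRIM(status)) = 'returned' AS is_returned
FROM raw_orders
"""


if __name__ == "__main__":
    conn = build_warehouse()
    print(explore_statuses(conn))  # reveals inconsistent casing and whitespace
    rebuild_orders(conn, V1)
    rebuild_orders(conn, V2)  # the revised model is rebuilt from the same raw table
    print(conn.execute("SELECT * FROM orders ORDER BY id").fetchall())
```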

Below, we break down some of the other major differences between the two:

| | ELT | ETL |
|---|---|---|
| Programming skills required | Often requires little to no code to extract and load data into your data warehouse. SQL is the primary language for transformations. | Often requires custom scripts or considerable data engineering lift to extract and transform data prior to load. Python, Scala, and SQL are often used to transform the data itself. |
| Separation of concerns | Extraction, load, and transformation layers can be explicitly separated out by different products. | ETL processes are often encapsulated in one product. |
| Distribution of transformations | Since transformations take place last, there is greater flexibility in the modeling process. Worry first about getting your data in one place, then take the time to explore the data and understand the best way to transform it. | Because transformation occurs before data is loaded into the target location, teams must do thorough upfront work to make sure data is transformed properly. Heavy transformations often take place downstream in the BI layer. |
| Data team roles | ELT workflows empower data team members who know SQL to create their own extraction and loading pipelines and transformations. | ETL workflows often require teams with greater technical skill to create and maintain pipelines. |


How ELT evolved the modern analytics space

Why has ELT adoption grown so quickly in recent years? A few reasons:

- The abundance of cheap cloud storage with modern data warehouses. The creation of modern data warehouses such as Redshift and Snowflake has made it possible for teams of all sizes to store and scale their data more cost-efficiently. This was a huge enabler for the ELT workflow.

- The development of low-code or no-code data extractors and loaders. Products that require little technical expertise, such as Fivetran and Stitch, can extract data from many data sources and load it into many different data warehouses; they have helped lower the barrier to entry for the ELT workflow. Data teams can now offload some of the data engineering lift needed to extract data and create complex transformations.

- A true code-based, version-controlled transformation layer with the development of dbt. Prior to the development of dbt, there was no singular transformation layer product. dbt helps data analysts apply software engineering best practices (version control, CI/CD, and testing) to data transformation, ultimately allowing anyone who knows SQL to take part in the ELT process.

- Increased compatibility between ELT layers and technology in recent years. With the expansion of extraction, loading, and transformation layers that integrate closely with each other and with cloud storage, the ELT workflow has never been more accessible. For example, Fivetran creates and maintains dbt packages to help write dbt transformations for the data sources it connects to.
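Testing is one of the practices dbt brings to the transformation layer. Conceptually, dbt’s built-in generic tests such as `unique` and `not_null` are SELECT queries that return failing rows, and a test passes when its query returns nothing. The Python sketch below imitates that idea with SQLite; it is not dbt’s implementation, and the `not_null_sql`, `unique_sql`, and `run_tests` helpers and the `orders` table are hypothetical. In dbt itself you would declare these tests in a YAML properties file rather than write the queries by hand.

```python
import sqlite3


def not_null_sql(table, column):
    # Select rows that violate the expectation: the column is NULL.
    return f"SELECT * FROM {table} WHERE {column} IS NULL"


def unique_sql(table, column):
    # Select non-null values that appear more than once.
    return (
        f"SELECT {column}, COUNT(*) AS n FROM {table} "
        f"WHERE {column} IS NOT NULL GROUP BY {column} HAVING COUNT(*) > 1"
    )


def run_tests(conn, tests):
    """Run each test query; a test passes when it returns zero rows."""
    results = {}
    for name, sql in tests.items():
        failures = conn.execute(sql).fetchall()
        results[name] = "pass" if not failures else f"fail ({len(failures)} failing row(s))"
    return results


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # stand-in for the warehouse
    conn.execute("CREATE TABLE orders (id INTEGER, status TEXT)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?)",
        [(1, "shipped"), (2, None), (3, "returned")],  # one NULL status on purpose
    )
    tests = {
        "unique_orders_id": unique_sql("orders", "id"),
        "not_null_orders_status": not_null_sql("orders", "status"),
    }
    print(run_tests(conn, tests))
```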

So what’s the right choice for you?

You’ve read this article, reflected on the wins and struggles of your current data stack, and have finally come to the golden question: which process is best for you, your data team, and your business?

Below, we’ve broken down the key considerations you should weigh when you’re thinking about how you want to empower your team and business with data, and how the ETL/ELT processes factor into that approach.

How important are the following to you?

- Version controlling your data transformations

- Storing raw and modeled data in a unified location

- Automatically testing and documenting your data

- Democratizing who is involved in data pipeline work—do you want to bring data analysts into the fold of data transformation and remove the liability of complex data pipelines from data engineers?

- Governing and standardizing core business metrics

If these things feel vital to you and the health of your data, team, and business, consider learning more about how dbt, a tool that encourages data practitioners to adopt the best practices of software engineering, is transforming the modern analytics space.

Last modified on: Mar 21, 2024
