Data quality testing: Where and why you should have it

Mar 24, 2023

Data teams hold a lot of responsibility—is the data they're providing to end users reliable? Are their questions being answered with the data? Did I double-check that there weren’t any duplicate rows in that table?

The stress of data quality is very real; it’s what keeps data analysts up at night. When data quality is questioned, business users don’t trust the data (or the data team) and start conducting ad hoc analyses and creating their own metrics—forming the wild west of data analysis and reporting.

Instead of waiting for data quality issues to seep into your business intelligence (BI) tool and eventually be caught by a stakeholder, the practice of analytics engineering believes data quality testing should be proactive—integrated throughout the data transformation process. Data should be tested in its raw format once it hits your data warehouse, rigorously tested as its transformation and business logic is added to it, before it’s moved into production (and exposed to end users), and throughout its lifecycle as new data is added and updated.

This is the mindset that software engineers use to build systems for high reliability and why many data practitioners are evolving to embrace this mindset shift.

What is data quality testing?

Data quality testing is the practice of making assertions about your data, and then testing whether these assertions are valid. This concept can be used to test both the quality of your raw source data and to validate that the code in your data transformations is working as intended.

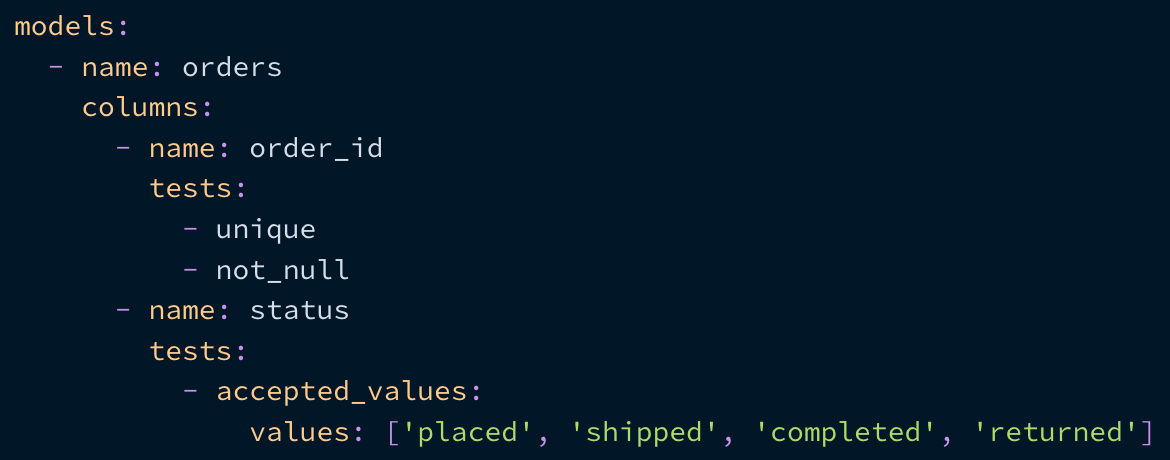

In dbt, data tests are defined in easy-to-read YAML—when you run a test, dbt compiles this configuration to a query, runs it against your database, and returns results (and prints a big red FAIL message!) if your assertion turns out to be false. Integrated, code-based, and version-controlled tests are one of the things users like best about dbt.

Why is data quality testing important?

Having your data meet the quality standards for your organization is the foundation for any analytics team to develop trust with their end business users. With high data quality, your data team ensures:

- Business users can make the best data-informed decisions

- That they can be trusted for reporting, and

- Big data audits become less scary

When data quality is high—and trust in the data team is high—there’s this almost indescribable flow state that exists for data teams.

But how are you regularly guaranteeing to your end users that the data you're exposing in a BI tool is accurate and reliable? Where is there accountability built into your data pipeline to hold the data team to these standards?

The answer: code-based and automated data quality tests.

What data quality tests should you be running?

The data quality tests you run will be guided by the general data quality dimensions of accuracy, validity, completeness, freshness, and consistency. Data tests in the analytics world typically cover these dimensions across two concepts:

- Correctness: Are key columns unique and non-null? Do column values match your expectations? Are columns in the correct format? Are there concerning duplicate rows?

- Freshness: How recently was raw data updated? If it wasn’t, how do I alert someone about this? Is the data available to me on the cadence I need?

These two heuristics and the tests that form them are the foundation for how modern data teams are approaching data quality testing. Below, we’ll unpack where exactly these tests of correctness and freshness should be implemented in your data pipelines.

Where and when should you test your data?

There are regular points in time at which you’ll want to test your data for correctness and freshness. During development, you’ll be testing for data quality as well as testing data transformation logic.

When you push new analytics code to production you’ll want to run your tests to make sure that your new code isn’t breaking anything in the existing code base. And then once your code is in production, you’ll want to have automated tests running at regular intervals to make sure that everything in your ETL pipeline and transformation logic is still working as expected.

1. During development

When you’re creating new data transformations for new data sources and business entities, you’ll want to integrate testing through this development cycle. In general, you’ll likely find yourself testing both your raw source data and new transformations.

Raw source data

With your raw data, you’re really checking the original quality of your data and asking yourself, “How much work am I really going to need to do here?” 😉 Here, you’ll likely find yourself creating tests checking for:

- Primary key uniqueness and non-nullness

- Column values meeting your basic assumptions

- Duplicate rows

At this stage, you can rely on dbt generic tests to help you easily test your source data. With dbt, you can also add source freshness tests, which allow you to regularly check that source data is being updated via an ETL tool and alert you when your data’s freshness falls below your expectations.

Data transformations

When you transform data, you’re cleaning, aggregating, joining, and implementing business logic to raw data. As you weave in more data transformations, fan out joins, and create new metrics and dimensions with SQL and Python, the room for error grows. In these newly formed tables, you’re going to want to test that:

- Primary keys are unique and non-null

- Row counts are correct

- Duplicate rows are not introduced in joins

- Relationships between upstream and downstream dependencies line up with your expectations

This is not an exhaustive list of what you might test after your data is transformed—this shouldn’t be an exhaustive list. How you test data, and how your team defines high-quality data, is dependent on your raw data and the needs of your business.

However, the list above is a great place to start building your foundation of data quality. You can leverage simple relationship, expression, and recency tests in dbt for these types of checks.

2. During pull requests

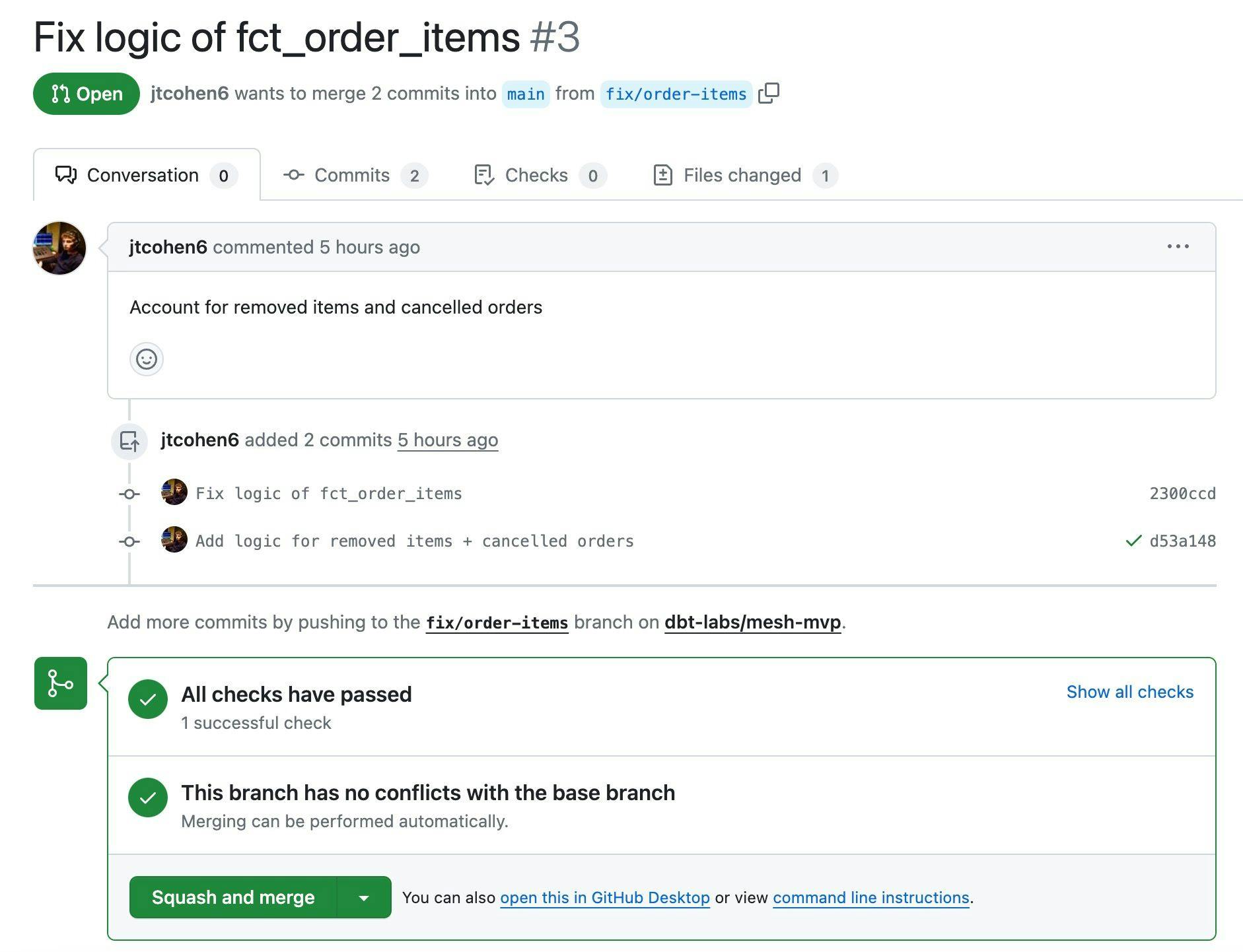

Before merging data transformation changes into your analytics code base, test your data. We use dbt Cloud to do this, so whenever a pull request is made against our internal analytics dbt project, dbt Cloud will kick off a job that builds and tests all models in your development branch in a scratch schema. If any of the models or tests fail, then dbt Cloud puts a big red X on the pull request. If everything works as intended, then dbt Cloud shows a nice green check mark.

The contextualized test success/failures help guide code review and act as a last check before the code gets merged into production. In practice, it looks like this:

By having an additional guardrail when you use a git-based data transformation tool, you welcome in other data team members into your work; other peers can review your code changes, help you debug errors, and ultimately build out an analytics foundation that is created with transparency and of higher quality.

However you manage the process, it’s vital to ensure that new data models and data transformation code is never entering your code base without being tested against your standards and peer-reviewed.

3. In production

Once your data transformations and tests are built and merged into your main production branch, you want to run them on a regularly scheduled cadence. Why? After your data model is created, things will happen to it over time:

- An engineer will push a new feature into production that changes your source data

- A business user will add a new field in the CRM, breaking the business logic of your data transformation

- Your ETL pipeline will experience an issue, pushing duplicate or missing data into your warehouse

- Etc, etc.

Automated tests ensure that when something changes about your business or the data, you—not your end user—are the first to know. We use dbt Cloud to regularly run automated tests on our data models. If any of the tests fail, dbt Cloud sends us email and Slack notifications, so we can investigate and resolve the issues in a timely manner.

Other popular tools for running data tests in production include Airflow, automation servers like GitLab CI/CD or CodeBuild, or scheduling cron jobs (read more about the pro’s and con’s of these different options here).

Building a foundation for data quality

To sum it all up:

- Data quality testing should be throughout your data pipelines, integrated in a way that’s seamless and accessible.

- Data should be tested in its raw format, transformed shape, and throughout its lifecycle.

- Data practitioners should proactively test data to build trust between data teams and their end users.

To level-set, these things don’t happen overnight; building out a data stack that is high-quality, regularly tested, and organized takes time, effort, and tooling.

However, there is new software, such as dbt, that’s making data testing a standard in the analytics workflow, providing built-in testing capabilities, and encouraging data teams to embrace software engineering best practices.

You can learn more about dbt and how it can help your team change the way you think about data quality testing here!

Last modified on: Mar 25, 2024

Achieve a 194% ROI with dbt Cloud. Access the Total Economic Impact™️ study to learn how. Download now ›