The hard part of becoming the largest solar provider in the nation

Sunrun is the largest residential solar company in the United States, servicing more than 750,000 customers nationwide and growing fast.

“Data drives efficient growth. In order to grow correctly, maintain all of our inventory, retain employees, execute a very aggressive sales strategy, and report and forecast effectively, we need to have our data in order,” said Jared Stout, Head of Data Management at Sunrun.

After acquiring Vivint Solar in 2020, the team was faced with the expected but no less daunting challenge of merging vastly different data structures.

Unifying data development post-acquisition

One of the main difficulties was various people developing and merging code at the same time.

Vivint Solar was using homemade python scripts to manage data transformations within their data warehouse. Sunrun was using the composer feature on Google Cloud and Airflow to transform and schedule their data. Across both companies, “people didn't know how we were building things,” explained James Sorensen, Senior Data Engineer. “They didn't know the code, they didn't know the lineage, and they didn't know how things were moving and transforming through our different stages of development.”

Each approach was less than efficient in their own right, both were inaccessible to anyone without python skills, and in any event, the two ways of work were incompatible with one another. They needed to standardize, with a more accessible and reliable way of work.

With code conflict management top-of-mind, the team searched for a solution that would allow them to:

- See model dependency

- Troubleshoot and run up and downstream models in the DAG

- Provide an isolated sandbox for individuals to make changes, separate from the whole team.

Choosing dbt Cloud

The Sunrun team had heard about dbt, and hoped that it could solve the issues they faced. They started a self-managed trial of the open source offering, dbt Core, just to ensure the workflow felt right for their business. However, Jed, the data team lead, quickly realized that managing an open source project that required command line proficiency, might still be limiting to members of the data team they hoped to activate for transformation work. So, he turned to dbt Cloud. With dbt Cloud, analysts could use the same development pattern as the central BI team and create their own data products.

“The number one reason why we switched from the open source offering to dbt Cloud was the ability to more meaningfully collaborate across data teams and with business stakeholders,” said Jared. “Sure, we could have paired dbt Core with Airflow to add things like testing and version control—for the small number of folks that have python and command line skills. Quality would be up, but we wouldn't be moving fast enough for anyone to care. Instead, we have 40 analysts self-serving via the IDE, and business stakeholders answering their own questions with human-readable documentation.”

Setting up the foundation

Migrating existing models into dbt

With dbt Cloud in place, the question now was, how much work would be involved? And how long would the migration process take? Turns out, way less than expected. “Us building out two complete data warehouses in two different platforms within a year and a half speaks volumes about the kind of velocity that dbt allowed us to achieve. Once the models were built out, moving them to a different platform was, for the most part, a pretty simple process…just porting over the code and making a few tweaks and syntactical changes that were necessary,” said Jared.

Automating code deployment for SOX compliance

With their data models in dbt, the BI team’s first priority was to eliminate manual complexity to reduce opportunity for error and speed time to production—a challenge when it comes to deploying code at a publicly traded company under the strict controls of SOX compliance.

SOX compliance requires a rigorous checklist: testing, code review and approval, independent sign-off, system lockdown, and a history of all included checklist items—all things that can block automation efforts. “dbt Cloud enables all of the above by having a place where we can easily target code executions for only files edited. It keeps a history of the success or failure of jobs and more importantly, tests,” explained Jared.

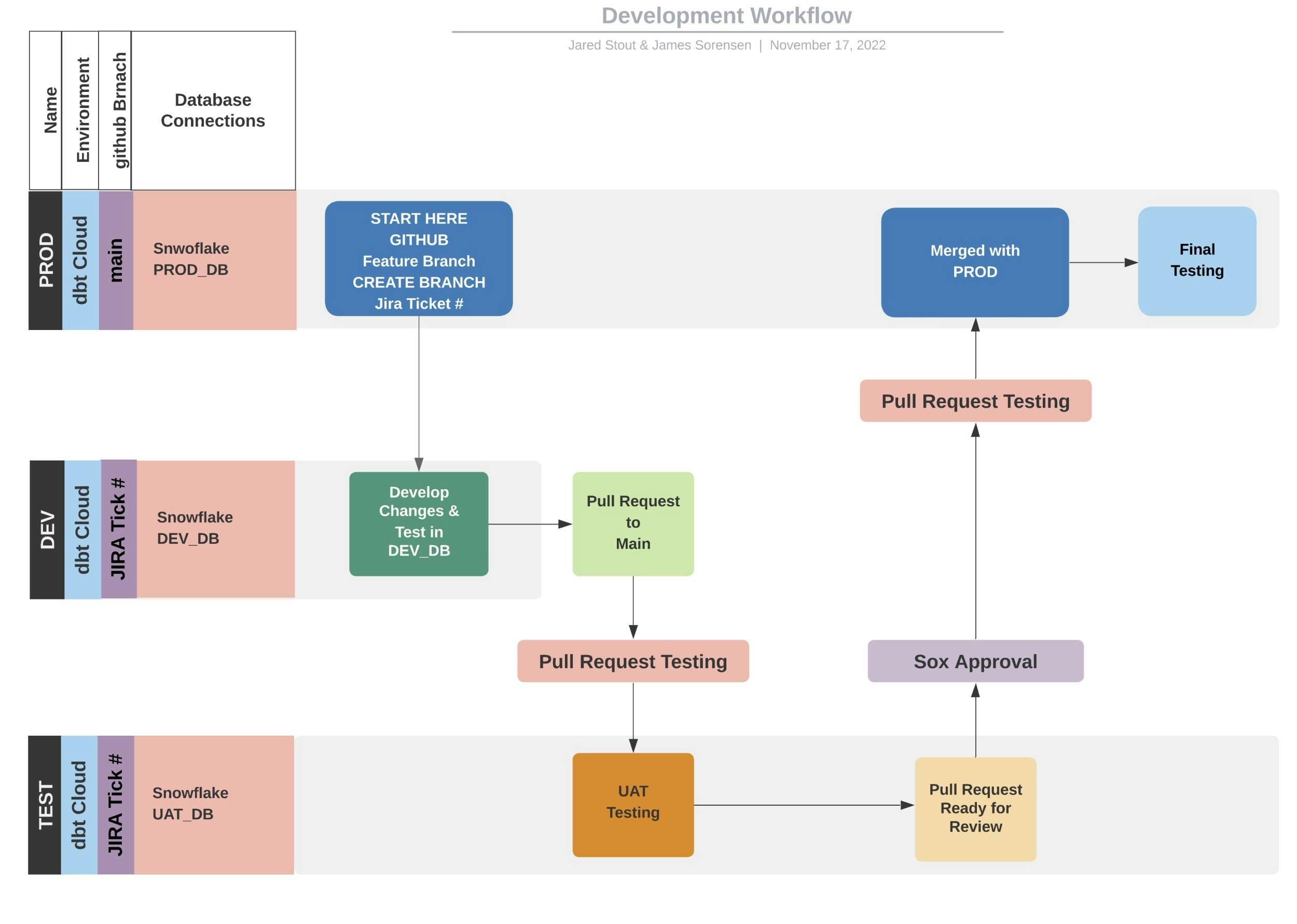

James and Jared created a GitHub Actions workflow that utilized Sunrun’s CI/CD tools: dbt for transformation and model generation layer, Jira for ticketing, and Github for code repository. “We're able to essentially create Git workflows and actions that check all of the Jira tickets to make sure they're in compliance with requirements and run the dbt models in the database, making sure they succeed before we allow the tickets to be deployed and go out into production.”

The combination of dbt’s version control and Jira and Github’s peer review process allows Sunrun to automate deployment while remaining SOX compliant. “Our deployment has sped up significantly—up to 75%,” said Jared. “We've become so dependent on the automation we've built and the ease of use that dbt Cloud offers that it’s hard to imagine ever going back to manual deployment.”

Speeding up data development

With dbt Cloud in place, the data team increased their velocity and data quality simultaneously.

Leveraging the power of macros

To keep up with the growing data scale, the team needed to develop and iterate quickly. "For me, the aha moment was when I realized the power of macros and how you could reproduce code automatically and dynamically generate SQL based on a macro that you defined,” recounted James. Using reusable chunks of code the team had already written and tested unlocked a level of velocity that was previously inaccessible.

Speeding Q&A with automated data lineage and documentation

Before dbt, troubleshooting was a tedious process requiring cascades of data engineering tickets. James recounted a scenario he faced often: “I’d spend an entire afternoon hunting down a broken link in the chain somewhere and trying to figure out what was going on that caused a report error. It was up to me to iterate through the code, going from file to file, from view to table, and table to view back and forth to see the dependencies and lineage.”

The lack of visibility led to constant questions like "what's the source data for this dashboard?" "Why did this number change from last month?" "How are you calculating this?" James recalled, “I remember working with the collections department on this one specific Salesforce field…” They had several fields that did the same thing, with slight, poorly-defined differences that the collections team used separately and therefore didn't know where their data was coming from within Salesforce.

“Those types of questions come up all the time and they're legitimate questions that should be answered,” said James. “So having dbt’s auto-documentation, having the ease of use of everything being in one spot, helps business units self-serve answers. If somebody has a question about whether something's up to date, dbt makes it really quick and easy to just check the refresh jobs and make sure that everything is running as expected.”

At Sunrun, self-service not only involves building reports in a BI tool but also understanding where the data is coming from, the lineage of the source of that data… even how it’s calculated. “The documentation and dbt status is really worth its weight in gold and saves everybody time,” emphasized Jared.

Upleveling data quality with testing

To further reduce time spent troubleshooting, the Sunrun team leveraged dbt's four out-of-the-box generic tests: not null, unique, accepted values, and relationships. “So far, we applied a standard set of tests across all of our models so nothing is built or delivered to stakeholders without having at least one level of testing on the table’s primary keys,” said Jared.

The BI team is working towards expanding test coverage for alerts and notifications throughout the company when data quality is not up to par. “dbt tests add a ton of value with the clarity and quality they enable. As we talk to different business units and deploy dbt Cloud in their areas, they definitely see the vision there of ‘wow with one or two lines of code, I can make sure my data is quality before it even gets to the stakeholder’ which is huge,” emphasized Jared.

Uniting data across the business

Documentation as a hub for collaboration

As dbt adoption spreads across the business, the BI team foresees documentation becoming a centralized place where anyone can gather information about the data contents and relationships within their warehouse. “Defining our data in a centralized place will be a central line of discussion in our governance committee meetings where we discuss what data means, how it's defined, how it's calculated… all of these things are an opportunity to use dbt documentation as a discussion hub in finalizing those decisions,” said Jared.

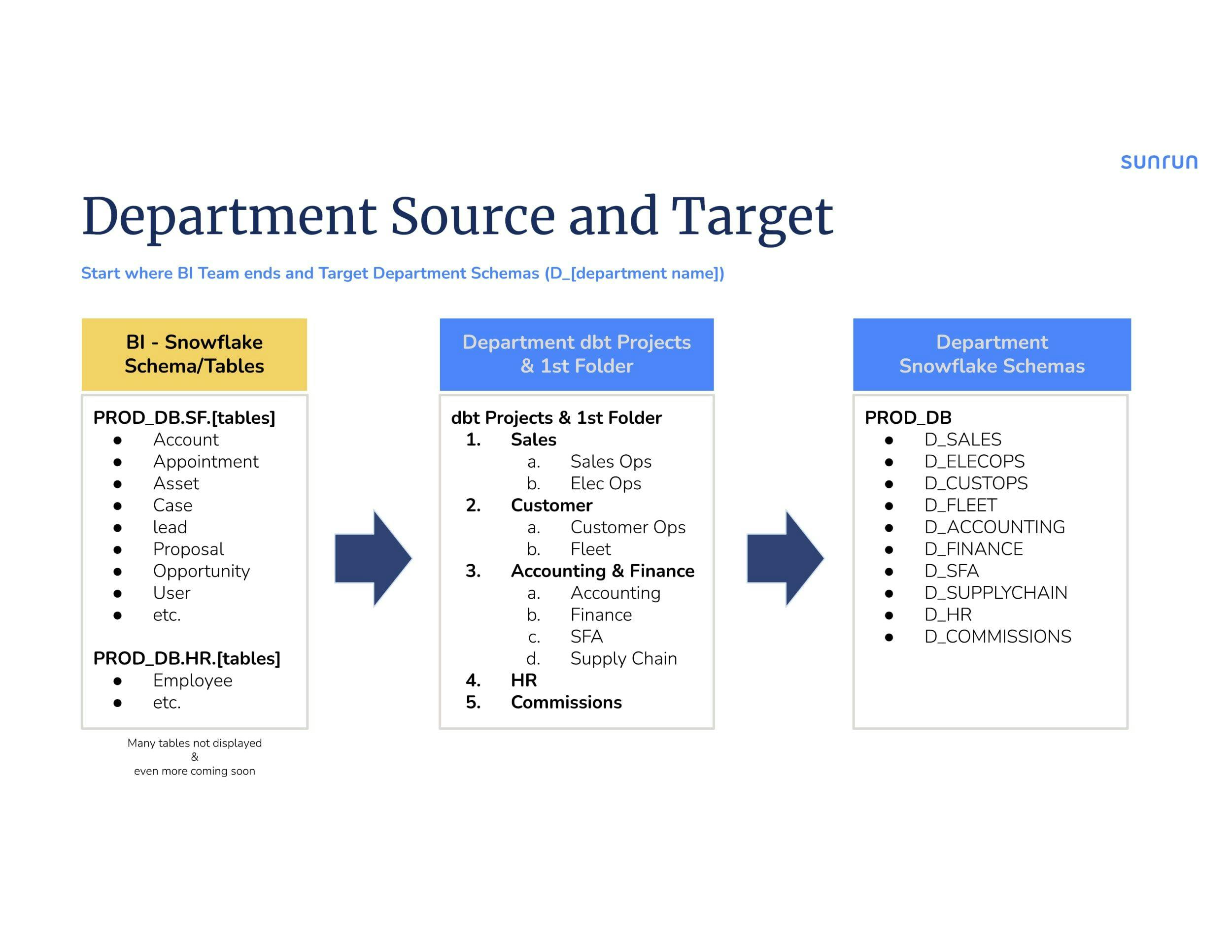

The last mile: empowering analysts at Sunrun

Those definitions start with the central BI team, but their “customers”—analysts embedded across business units—complete the data modeling process. “Our vision is to be the centralized place to get data, and then other business units would be the users who take it the last mile. Using the same system, they can give their stakeholders the same type of data dictionaries and the same documentation from a dbt standpoint so everybody's working on the same platform,” said Jared.

The workflow

- The business intelligence team first ingests everything.

- They standardize the data, the field names, and the data types—flattening or otherwise preparing the data from an engineering standpoint.

- Finally, they add the definitions and pieces relevant across the whole business.

From there, the data is handed off to embedded analysts across business units who prepare the data for useful insights within their department’s context.

- Senior analysts across electric operations, customer service, sales, install operations, commissions, accounting & finance, performance, etc., customize mappings only relevant to their departments, import spreadsheets to the data warehouse, and add their “special sauce”

- With their completely modeled data, analysts then use their data to create Tableau reports for their stakeholders.

For example, the performance team ingests IOT data that is created by Sunrun’s solar panels—the data about how much electricity they’re generating—and reports it back to the business. Additionally, they port individual installation performance to customers via their website portal. “It’s a huge data set that’s critical to ensure our panels are efficient and provide value to the customer. And it’s up to the analysts on the team to work with the dataset’s unique complexities and model appropriately to generate useful insights,” explained Jared.

Ramping analysts of every skill set quickly

The BI team is measuring initial success through adoption metrics, and the results are impressive. “We set up half a dozen GitHub repositories by departments and we check on their activity every week: we’ll pull up Github and show them the trends on adoption and collaboration, and most business units are on an upwards trajectory,” said Jared. More people committing their code to GitHub means greater dbt adoption and momentum towards quality, collaborative, governed data. “We have 50 dbt Cloud user licenses right now, at 100% utilization, which speaks to how easy it’s been for analysts to ramp. dbt’s inline web interface is very good, and the IDE enables exactly what we need the business to do. They’re excited to get into dbt, and there’s a queue of non-senior analysts wanting access too,” said Jared.

Coming up: the BI team’s roadmap

Sunrun’s BI team is continuing to make progress on the foundations of their dbt implementation: locking down and applying additional tests, onboarding new data sources and business units, building out documentation, and ensuring that documentation is widely available to anybody in the business.

For example:

With their central BI team to embedded analysts to business stakeholders model, Sunrun is also keeping collaboration top of mind. “We're in the process of building a dbt development community with regular meetings to share code, talk about techniques, and really build a cohesive place where people can share information and prevent developing in siloes,” said James.

“We're on the edge of our seats to see where dbt is going and what further additions are coming up that we can take advantage of” added Jared. “There’s so much opportunity to improve, and we’re just getting started.”