Polyglot pipelines: why dbt and Python were always meant to be

Sep 12, 2022


More than five years ago, I wrote “Why doesn’t dbt support Python?”

Even one year into the dbt journey, it was clear to us that SQL wasn’t the only language that could, and should, be used productively by analytics engineers to transform data. We ran into the need for non-SQL capabilities in our consulting work and increasingly fielded the question from the dbt Community. As much as we wanted to (and this was the subject of many, many hours of debate!), we just didn’t see a way at the time to create the same type of magical dbt experience in other languages.

dbt has always tried to make building production-grade data pipelines accessible to any data practitioner. Most practitioners don’t want to think about where data sits, where compute happens, or how to manage environments. The SQL interface has historically been the best abstraction to make all of this transparent. Write SQL and the database just makes it work.

Non-SQL data infrastructure has historically been missing three specific things to be able to provide this level of abstraction:

- Data locality. dbt believes that code should come to the data, not the other way around. Moving data is tremendously inefficient and costly. Historically, data movement was required in and out of SQL-based environments to execute non-SQL workloads.

- Client/server model. Data practitioners don’t generally want to manage environment config—they want to write code that just works. Having a client/server model makes this easy, and cloud offerings make this even easier. Python development has historically depended on practitioners managing their own local environments.

- Interactivity. Even when you can execute Python remotely, doing so has typically not been interactive. Instead, you find yourself pushing code, submitting jobs, etc. This is a totally foreign experience to someone used to the interactivity of SQL.

My goal in writing the original post was to say: we’re excited to support languages beyond SQL once they meet the same bar for user experience that SQL provides today. And over the past five years, that’s happened.

- Snowflake launched Python support in Snowpark, extending its traditional SQL capabilities.

- BigQuery released a Spark connector, allowing BQ users to process data natively in both SQL and Python.

- Databricks launched Databricks SQL analytics and now provides both SQL and Python interfaces to the same data.

These three big announcements made us think “now is the time!” While each one of these products presents a slightly different set of technical capabilities and user experiences, they all now hit the bar for the experience we were looking for:

- Each of these products allows users to reference the same data in both SQL and non-SQL workloads without moving it.

- Each of these products completely abstracts away the task of environment management via a fully cloud-native solution.

- Each of these products provides the APIs that dbt needs in order to power a native dbt development experience.

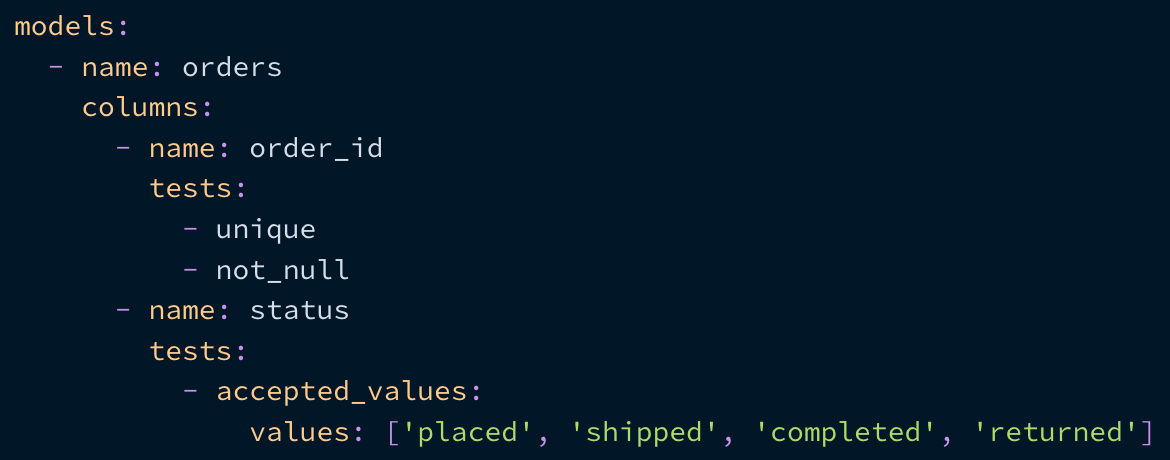

Over the past few quarters, we’ve rolled up our sleeves and gotten to work, and I’m so, so excited about the experience we’ve been able to create for dbt users. As all great experiences should be, it feels so simple that you almost don’t even notice it’s there: just create a .py file, reference some other models, and return a data frame!

There, you just created your first Python model 😁. Just as with SQL, dbt templatizes all of the boilerplate code required and leaves your business logic concise, clean, and readable. 🧑‍🍳
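To make that concrete, here is a minimal sketch of what such a model could look like. The `model(dbt, session)` signature, `dbt.config(materialized="table")`, and `dbt.ref()` follow the dbt Python model interface; the model names `stg_orders` and `stg_customers` and the join logic are hypothetical, and the `join(..., "customer_id")` call assumes a Snowpark- or PySpark-style DataFrame. Because a real run needs a warehouse, the `FakeFrame` and `FakeDbt` classes are hypothetical stand-ins for local illustration only; they are not part of dbt and would not go in a real model file.

```python
# A minimal dbt Python model (sketch; model names are hypothetical).
def model(dbt, session):
    # Ask dbt to materialize whatever this function returns as a table.
    dbt.config(materialized="table")

    # dbt.ref() resolves another model in the project, much like {{ ref() }}
    # in SQL, and returns a data frame native to the platform.
    orders = dbt.ref("stg_orders")
    customers = dbt.ref("stg_customers")

    # Business logic: attach customer attributes to each order (inner join).
    return orders.join(customers, "customer_id")


# --- Hypothetical stand-ins for local illustration only (not part of dbt) ---

class FakeFrame:
    """Tiny list-of-dicts stand-in for a warehouse data frame."""

    def __init__(self, rows):
        self.rows = rows

    def join(self, other, on):
        # Inner join on a single key column; right-hand values win on name clashes.
        index = {}
        for row in other.rows:
            index.setdefault(row[on], []).append(row)
        return FakeFrame(
            [{**left, **right} for left in self.rows for right in index.get(left[on], [])]
        )


class FakeDbt:
    """Stand-in for the `dbt` object that dbt passes to model()."""

    def __init__(self, tables):
        self.tables = tables
        self.model_config = {}

    def config(self, **kwargs):
        # Record config calls so they can be inspected.
        self.model_config.update(kwargs)

    def ref(self, name):
        return FakeFrame(self.tables[name])


fake = FakeDbt({
    "stg_orders": [
        {"order_id": 1, "customer_id": 10},
        {"order_id": 2, "customer_id": 11},
    ],
    "stg_customers": [{"customer_id": 10, "name": "Ada"}],
})
result = model(fake, session=None)
print(fake.model_config)  # {'materialized': 'table'}
print(result.rows)        # [{'order_id': 1, 'customer_id': 10, 'name': 'Ada'}]
```

In a real project, only the `model` function would live in a `.py` file under `models/`; dbt supplies the actual `dbt` and `session` objects and materializes the returned data frame according to the model’s config, much as it would the result of a SQL model’s `select`.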

dbt has always been and will always be opinionated about how analytics engineering work is done. But it’s not religious about exactly what language should be used to express data transformations. I hope you'll try out dbt Python models (currently in beta with dbt Core v1.3), join the active discussions on how to make them useful, and help us continue to fine-tune this new functionality. I’m incredibly excited to ship this work and see what you do with it! There will be entirely new best practices to discover and write about, and I can’t wait to participate in the conversation as it unfolds.

Last modified on: Jun 03, 2024
